How to Create RAID Arrays in Linux with mdadm on Ubuntu 22.04

Here you will find out:

- how to create a RAID array using mdadm

- how DiskInternals can help you with RAID server data recovery

The mdadm utility can be used to create and manage storage arrays by utilizing Linux's software RAID capabilities. When it comes to coordinating individual storage devices and creating logical storage devices with improved performance or redundancy, administrators have a lot of flexibility.

In this post, we'll go through a variety of RAID setups that can be configured on an Ubuntu 22.04 server.

Prepare the disks

You need at least two drives installed and ready to use. Make sure there is nothing on them that you want to keep, because they will be erased. Also make sure you can afford to lose whatever you store on a RAID 0 array. Speed is the benefit of RAID 0, but that's about all: if one drive dies, all of your data is lost.

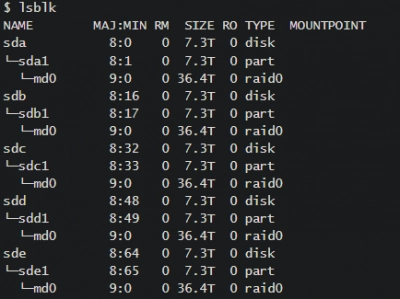

List every block device on the system:

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 7.3T 0 disk

└─sda1 8:1 0 7.3T 0 part /mnt/mydrive

sdb 8:16 0 7.3T 0 disk

sdc 8:32 0 7.3T 0 disk

sdd 8:48 0 7.3T 0 disk

sde 8:64 0 7.3T 0 disk

nvme0n1 259:0 0 7.3T 0 disk

└─nvme0n1p1 259:1 0 7.3T 0 part /

You need to make sure all the drives that will be part of the array are partition-free:

$ sudo umount /dev/sda?; sudo wipefs --all --force /dev/sda?; sudo wipefs --all --force /dev/sda

$ sudo umount /dev/sdb?; sudo wipefs --all --force /dev/sdb?; sudo wipefs --all --force /dev/sdb

Check to make sure nothing's mounted!
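
The mount check can be scripted. The following is a minimal sketch, not part of the original procedure: `mounted_entries` is a hypothetical helper name, and it assumes input in the form produced by `lsblk -rno NAME,MOUNTPOINT` (one device per line, with the mount point as an optional second field).

```shell
# Print "NAME MOUNTPOINT" for every line that has a non-empty mount point.
# Reads lsblk raw output (NAME and MOUNTPOINT columns) on stdin.
# This only reports; it does not unmount or modify anything.
mounted_entries() {
    awk 'NF >= 2 { print $1, $2 }'
}

# Example (read-only):
#   lsblk -rno NAME,MOUNTPOINT /dev/sda /dev/sdb | mounted_entries
```

If the function prints nothing, none of the listed devices or their partitions are mounted.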

Ready to get your data back?

To start recovering your data, documents, databases, images, videos, and other files from your RAID 0, RAID 1, 0+1, 1+0, 1E, RAID 4, RAID 5, 50, 5EE, 5R, RAID 6, RAID 60, RAIDZ, RAIDZ2, and JBOD, press the FREE DOWNLOAD button to get the latest version of DiskInternals RAID Recovery® and begin the step-by-step recovery process. You can preview all recovered files absolutely for free. To check the current prices, please press the Get Prices button. If you need any assistance, please feel free to contact Technical Support. The team is here to help you get your data back!

Partition the disks with sgdisk

You could interactively do this with gdisk, but I like more automation, so I use sgdisk. If it's not installed, and you're on a Debian-like distro, install it: sudo apt install -y gdisk.

sudo sgdisk -n 1:0:0 /dev/sda

sudo sgdisk -n 1:0:0 /dev/sdb

Do that for each of the drives.
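
Since the same command is repeated for every drive, a small loop can generate the list. This is a sketch under the assumption that you want to review the commands before running them: `print_partition_cmds` is a hypothetical helper that only prints the sgdisk invocations shown above and executes nothing.

```shell
# Print (not run) one sgdisk command per drive given as an argument,
# using the same "-n 1:0:0" partition spec as the commands above.
print_partition_cmds() {
    for dev in "$@"; do
        printf 'sudo sgdisk -n 1:0:0 %s\n' "$dev"
    done
}

# Example:
#   print_partition_cmds /dev/sda /dev/sdb /dev/sdc
```

Once the printed list matches the drives you intend to partition, the commands can be run one by one.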

How to reset existing RAID devices

In this tutorial, we'll go through how to build various RAID levels. If you want to follow along, you will probably want to reuse your storage devices after each section. Read this section to learn how to quickly reset your component storage devices before testing a new RAID level. If you haven't created any arrays yet, skip this section for now.

Note: this operation completely destroys the array and any data written to it. Before deleting an array, make sure you're working with the right one and that you've copied off any data you need to save.

Find the active arrays in the /proc/mdstat file by typing:

cat /proc/mdstat

Output

Personalities : [raid0] [linear] [multipath] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid0 sdc[1] sdd[0]

209584128 blocks super 1.2 512k chunks

unused devices: <none>
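
To pull just the array names and levels out of that output, a short awk filter is enough. This is a minimal sketch: `list_md_arrays` is a hypothetical helper, and it assumes the mdstat line format shown above (`mdN : active <level> <members>`).

```shell
# Print "ARRAY LEVEL" for each active array found in mdstat-format text on stdin.
# Scans the fields after "active" for the first one that names a RAID level,
# so a status word placed before the level does not confuse it.
list_md_arrays() {
    awk '$2 == ":" && $3 == "active" {
        for (i = 4; i <= NF; i++) {
            if ($i ~ /^(raid[0-9]+|linear)$/) { print $1, $i; break }
        }
    }'
}

# Example:
#   list_md_arrays < /proc/mdstat
```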

Unmount the array from the filesystem:

sudo umount /dev/md0

Then, stop and remove the array by typing:

sudo mdadm --stop /dev/md0

sudo mdadm --remove /dev/md0

Note: keep in mind that the /dev/sd* names can change any time you reboot! Check them every time to make sure you are operating on the correct devices.

Use the following command to find the devices that were used to create the array:

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

NAME SIZE FSTYPE TYPE MOUNTPOINT

sda 100G disk

sdb 100G disk

sdc 100G linux_raid_member disk

sdd 100G linux_raid_member disk

vda 20G disk

├─vda1 20G ext4 part /

└─vda15 1M part
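
The former array members are the rows whose FSTYPE is linux_raid_member. As a sketch, assuming input in the form produced by `lsblk -rno NAME,FSTYPE`, the hypothetical helper `raid_member_devices` below prints their device paths:

```shell
# Print /dev paths of devices whose filesystem type is linux_raid_member.
# Reads lsblk raw output (NAME and FSTYPE columns) on stdin.
raid_member_devices() {
    awk '$2 == "linux_raid_member" { print "/dev/" $1 }'
}

# Example (read-only):
#   lsblk -rno NAME,FSTYPE | raid_member_devices
```

Review the printed list by hand before zeroing any superblocks, since that step is destructive.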

After you've discovered the devices that made up an array, return them to normal by zeroing their superblock:

sudo mdadm --zero-superblock /dev/sdc

sudo mdadm --zero-superblock /dev/sdd

Any permanent references to the array should be removed. Comment out or delete the reference to your array from the /etc/fstab file:

sudo nano /etc/fstab

/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0

Also, comment out or remove the array definition from the /etc/mdadm/mdadm.conf file:

sudo nano /etc/mdadm/mdadm.conf

ARRAY /dev/md0 metadata=1.2 name=mdadmwrite:0 UUID=7261fb9c:976d0d97:30bc63ce:85e76e91
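
If you prefer to preview the change before opening an editor, the commenting-out can be sketched as a sed filter. `comment_out_md` is a hypothetical helper; it reads a file on stdin and writes the result to stdout, so it never edits /etc/fstab or mdadm.conf in place.

```shell
# Prefix "#" to lines that start with the given md device (fstab style)
# or with "ARRAY <device>" (mdadm.conf style). Other lines pass through.
# The trailing whitespace match keeps /dev/md0 from matching /dev/md01.
comment_out_md() {
    md="$1"
    sed -E "s|^(ARRAY[[:space:]]+)?${md}[[:space:]]|#&|"
}

# Example (preview only):
#   comment_out_md /dev/md0 < /etc/fstab
#   comment_out_md /dev/md0 < /etc/mdadm/mdadm.conf
```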

Finally, update the initramfs again:

sudo update-initramfs -u

You should be able to utilize the storage devices separately or as parts of a different array at this point.

How to create RAID 0 Array

The RAID 0 array works by breaking up data into chunks and striping it across the available disks. This means that each disk contains a portion of the data and that multiple disks will be referenced when retrieving information.

- Requirements: minimum of 2 storage devices

- Primary benefit: Performance

- Keep in mind the following: Make sure you have working backups. A single device failure will wipe out the entire array's data.

Identify the Component Devices

To get started, find the identifiers for the raw disks that you will be using:

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

NAME SIZE FSTYPE TYPE MOUNTPOINT

sda 100G disk

sdb 100G disk

vda 20G disk

├─vda1 20G ext4 part /

└─vda15 1M part

In the output above, you can see that we have two 100G disks without a filesystem. For this session, these devices have been given the identifiers /dev/sda and /dev/sdb. These are the raw components that will make up the array.

Create the Array

Use the mdadm --create command to construct a RAID 0 array using these components. You must specify the device name to create (in our example, /dev/md0), the RAID level, and the number of devices:

sudo mdadm --create --verbose /dev/md0 --level=0 --raid-devices=2 /dev/sda /dev/sdb

You can ensure that the RAID was successfully created by checking the /proc/mdstat file:

cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid0 sdb[1] sda[0]

209584128 blocks super 1.2 512k chunks

unused devices: <none>

As you can see in the line beginning with md0, the /dev/md0 device has been created in the RAID 0 configuration using the /dev/sda and /dev/sdb devices.
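
The create command follows the same pattern for every RAID level in this guide, so it can be assembled by a small function. This is a sketch only: `mdadm_create_cmd` is a hypothetical helper that prints the command rather than running it, and the minimum device counts are the ones listed in each section's requirements.

```shell
# Print an mdadm --create command for the given array name, RAID level,
# and devices. Refuses to print if fewer devices are given than the
# minimum this guide lists for that level. Nothing is executed.
mdadm_create_cmd() {
    md="$1"; level="$2"; shift 2
    case "$level" in
        0|1) min=2 ;;
        5)   min=3 ;;
        6)   min=4 ;;
        10)  min=3 ;;
        *)   echo "unsupported level: $level" >&2; return 1 ;;
    esac
    if [ "$#" -lt "$min" ]; then
        echo "RAID $level needs at least $min devices, got $#" >&2
        return 1
    fi
    echo "sudo mdadm --create --verbose $md --level=$level --raid-devices=$# $*"
}

# Example:
#   mdadm_create_cmd /dev/md0 0 /dev/sda /dev/sdb
```

Printing first gives you a chance to confirm the device names, which matters because creating an array overwrites the listed devices.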

Create and Mount the Filesystem

Next, create a filesystem on the array:

sudo mkfs.ext4 -F /dev/md0

Create a mount point to attach the new filesystem:

sudo mkdir -p /mnt/md0

You can mount the filesystem by typing:

sudo mount /dev/md0 /mnt/md0

Check whether the new space is available by typing:

df -h -x devtmpfs -x tmpfs

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 20G 1.1G 18G 6% /

/dev/md0 197G 60M 187G 1% /mnt/md0

The new filesystem is mounted and accessible.

Save the Array Layout

We'll need to adjust the /etc/mdadm/mdadm.conf file to ensure that the array is reassembled automatically at boot. You can scan the active array and append its definition to the file by typing:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

Afterwards, you can update the initramfs, or initial RAM file system, so that the array will be available during the early boot process:

sudo update-initramfs -u

Add the new filesystem mount options to the /etc/fstab file for automatic mounting at boot:

echo '/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0' | sudo tee -a /etc/fstab

Your RAID 0 array should now be assembled and mounted automatically at each boot.
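
Because the commands above use tee -a, running them again (for example after rebuilding the array in a later section) appends a second copy of the same line. One way to guard against that is sketched below. `append_if_missing` is a hypothetical helper, and it assumes it runs with permission to write the target file.

```shell
# Append a line to a file only if an identical line is not already present.
# grep -x matches whole lines, -F treats the line as a fixed string.
append_if_missing() {
    line="$1"; file="$2"
    if grep -qxF -- "$line" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s\n' "$line" >> "$file"
}

# Example (as root):
#   append_if_missing '/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0' /etc/fstab
```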

How to create RAID 1 Array

RAID 1 is a type of array that mirrors data across all accessible drives. In a RAID 1 array, each disk receives a complete duplicate of the data, ensuring redundancy in the case of a device failure.

- Requirements: minimum of 2 storage devices

- Primary benefit: Redundancy

- Things to keep in mind: Since two copies of the data are maintained, only half of the disk space will be usable.

Identify the Component Devices

To begin, locate the IDs for the raw disks you'll be using:

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

NAME SIZE FSTYPE TYPE MOUNTPOINT

sda 100G disk

sdb 100G disk

vda 20G disk

├─vda1 20G ext4 part /

└─vda15 1M part

As you can see in the output above, we have two 100G drives without a filesystem. In this example, these devices have been given the identifiers /dev/sda and /dev/sdb for this session. These are the raw components that will be used to build the array.

Create the Array

Use the mdadm --create command to construct a RAID 1 array using these components. You must specify the device name to create (in our example, /dev/md0), the RAID level, and the number of devices:

sudo mdadm --create --verbose /dev/md0 --level=1 --raid-devices=2 /dev/sda /dev/sdb

If the component devices you are using are not partitions with the boot flag enabled, you will likely be given the following warning. It is safe to type y to continue:

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 104792064K

Continue creating array? y

The mdadm tool will start to mirror the drives. This can take some time to complete, but the array can be used during this time. You can monitor the progress of the mirroring by checking the /proc/mdstat file:

cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid1 sdb[1] sda[0]

104792064 blocks super 1.2 [2/2] [UU]

[====>................] resync = 20.2% (21233216/104792064) finish=6.9min speed=199507K/sec

unused devices: <none>

The line beginning with md0 shows that the /dev/md0 device has been created in the RAID 1 configuration using the /dev/sda and /dev/sdb devices. The resync line shows the progress of the mirroring. You can continue reading the tutorial while this process runs.
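
If you want the progress figure on its own, for example to poll it from a script, it can be extracted from the resync or recovery line. The following is a sketch: `sync_percent` is a hypothetical helper that assumes the progress line format shown above.

```shell
# Print the percentage from a "resync = NN.N%" or "recovery = NN.N%" line
# in mdstat-format text read on stdin. Prints nothing if no sync is running.
sync_percent() {
    sed -nE 's/.*(resync|recovery)[[:space:]]*=[[:space:]]*([0-9.]+)%.*/\2/p'
}

# Example:
#   sync_percent < /proc/mdstat
```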

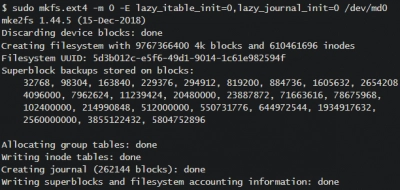

Format the array

Lazy initialization is used when formatting here to avoid the (very lengthy) process of initializing all the inodes up front. There's little practical reason to format huge arrays the "normal", non-lazy way, especially with brand-new disks that aren't full of old data.

Mount the array

After formatting, lsblk lists md0 under each of its member disks. At that point you can make a mount point and mount the volume. The next section walks through formatting and mounting step by step using /mnt/md0; use one mount point or the other, not both. The equivalent commands for a mount point named /mnt/raid0 are:

$ sudo mkdir /mnt/raid0

$ sudo mount /dev/md0 /mnt/raid0

Create and Mount the Filesystem

Next, create a filesystem on the array:

sudo mkfs.ext4 -F /dev/md0

Create a mount point to attach the new filesystem:

sudo mkdir -p /mnt/md0

You can mount the filesystem by typing:

sudo mount /dev/md0 /mnt/md0

Check whether the new space is available by typing:

df -h -x devtmpfs -x tmpfs

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 20G 1.1G 18G 6% /

/dev/md0 99G 60M 94G 1% /mnt/md0

The new filesystem is mounted and accessible.

Save the Array Layout

To make sure that the array is reassembled automatically at boot, we will have to adjust the /etc/mdadm/mdadm.conf file. You can automatically scan the active array and append its definition to the file by typing:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

Afterwards, you can update the initramfs, or initial RAM file system, so that the array will be available during the early boot process:

sudo update-initramfs -u

Add the new filesystem mount options to the /etc/fstab file for automatic mounting at boot:

echo '/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0' | sudo tee -a /etc/fstab

Your RAID 1 array should now be assembled and mounted automatically at each boot.

Creating a RAID 5 Array

The RAID 5 array type is implemented by striping data across the available devices. One component of each stripe is a calculated parity block. If a device fails, the parity block and the remaining blocks can be used to calculate the missing data. The device that receives the parity block is rotated so that each device has a balanced amount of parity information.

- Requirements: minimum of 3 storage devices.

- Primary benefit: Redundancy with more usable capacity.

- Things to keep in mind: While the parity information is distributed, one disk’s worth of capacity will be used for parity. RAID 5 can suffer from very poor performance when in a degraded state.

Identify the Component Devices

To get started, find the identifiers for the raw disks that you will be using:

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

NAME SIZE FSTYPE TYPE MOUNTPOINT

sda 100G disk

sdb 100G disk

sdc 100G disk

vda 20G disk

├─vda1 20G ext4 part /

└─vda15 1M part

As you can see in the output above, we have three 100G drives without a filesystem. For this session, these devices have been given the identifiers /dev/sda, /dev/sdb, and /dev/sdc. These are the raw components that will be used to build the array.

Create the Array

Use the mdadm --create command to construct a RAID 5 array using these components. You must specify the device name to create (in our example, /dev/md0), the RAID level, and the number of devices:

sudo mdadm --create --verbose /dev/md0 --level=5 --raid-devices=3 /dev/sda /dev/sdb /dev/sdc

The mdadm tool will start to configure the array (it actually uses the recovery process to build the array for performance reasons). This can take some time, but the array can be used in the meantime. Check the /proc/mdstat file to see how far the build has progressed:

cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md0 : active raid5 sdc[3] sdb[1] sda[0]

209584128 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]

[===>.................] recovery = 15.6% (16362536/104792064) finish=7.3min speed=200808K/sec

The line beginning with md0 shows that the /dev/md0 device has been created in the RAID 5 configuration using the /dev/sda, /dev/sdb, and /dev/sdc devices. The recovery line shows the progress of the build. You can continue the guide while this process completes.

Create and Mount the Filesystem

Next, create a filesystem on the array:

sudo mkfs.ext4 -F /dev/md0

Create a mount point to attach the new filesystem:

sudo mkdir -p /mnt/md0

You can mount the filesystem by typing:

sudo mount /dev/md0 /mnt/md0

Check whether the new space is available by typing:

df -h -x devtmpfs -x tmpfs

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 20G 1.1G 18G 6% /

/dev/md0 197G 60M 187G 1% /mnt/md0

The new filesystem is mounted and accessible.

Save the Array Layout

To make sure that the array is reassembled automatically at boot, we will have to adjust the /etc/mdadm/mdadm.conf file.

Check that the array has finished building before making any changes to the settings. Because of the way mdadm generates RAID 5 arrays, the number of spares in the array will be displayed incorrectly if the array is still being built:

cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md0 : active raid5 sdc[3] sdb[1] sda[0]

209584128 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

The output above shows that the build is complete. Now, we can automatically scan the active array and append its definition to the file by typing:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

Afterwards, you can update the initramfs, or initial RAM file system, so that the array will be available during the early boot process:

sudo update-initramfs -u

Add the new filesystem mount options to the /etc/fstab file for automatic mounting at boot:

echo '/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0' | sudo tee -a /etc/fstab

Your RAID 5 array should now be assembled and mounted automatically at each boot.
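
The "wait until the build has finished" check from this section can be scripted so it is not skipped by accident. This is a minimal sketch: `md_build_done` is a hypothetical helper that assumes the mdstat format shown earlier, where a finished array shows a status such as [UUU] and no resync or recovery line.

```shell
# Exit 0 if the named array appears in mdstat-format text on stdin with
# every member up and no resync/recovery/reshape in progress; exit 1 otherwise.
md_build_done() {
    awk -v md="$1" '
        # A "name : ..." line starts a new block; track whether it is ours.
        $2 == ":" { in_md = ($1 == md); if (in_md) found = 1; next }
        # A blank line or the "unused devices" line ends the current block.
        NF == 0 || $1 == "unused" { in_md = 0; next }
        # A missing member shows as "_" inside the [UU_] status field.
        in_md && /\[[U_]*_[U_]*\]/ { busy = 1 }
        in_md && /resync|recovery|reshape/ { busy = 1 }
        END { exit (found && !busy) ? 0 : 1 }
    '
}

# Example:
#   md_build_done md0 < /proc/mdstat && echo "md0 is ready"
```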

Creating a RAID 6 Array

The RAID 6 array type is implemented by striping data across the available devices. Two components of each stripe are calculated parity blocks. If one or two devices fail, the parity blocks and the remaining blocks can be used to calculate the missing data. The devices that receive the parity blocks are rotated so that each device has a balanced amount of parity information. This is similar to a RAID 5 array, but allows for the failure of two drives.

- Requirements: minimum of 4 storage devices.

- Primary benefit: Double redundancy with more usable capacity.

- Things to keep in mind: While the parity information is distributed, two disks' worth of capacity will be used for parity. RAID 6 can suffer from very poor performance when in a degraded state.
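
The capacity notes for RAID 0, 1, 5, and 6 reduce to simple arithmetic on the number of devices and the size of each. The sketch below assumes equally sized devices and ignores metadata and filesystem overhead, so real df figures come out slightly lower. `raid_usable` is a hypothetical helper name.

```shell
# Print the approximate usable capacity for a RAID level.
# Arguments: level, number of devices, size of one device (any unit).
raid_usable() {
    level="$1"; n="$2"; size="$3"
    case "$level" in
        0) echo $(( n * size )) ;;          # striping: all capacity usable
        1) echo "$size" ;;                  # mirroring: one device's worth
        5) echo $(( (n - 1) * size )) ;;    # one device's worth of parity
        6) echo $(( (n - 2) * size )) ;;    # two devices' worth of parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

# Example: four 100G devices in RAID 6
#   raid_usable 6 4 100
```

For the examples in this guide this gives 200 for two-disk RAID 0, 100 for two-disk RAID 1, 200 for three-disk RAID 5, and 200 for four-disk RAID 6, in line with the df sizes shown in each section.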

Identify the Component Devices

To get started, find the identifiers for the raw disks that you will be using:

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

NAME SIZE FSTYPE TYPE MOUNTPOINT

sda 100G disk

sdb 100G disk

sdc 100G disk

sdd 100G disk

vda 20G disk

├─vda1 20G ext4 part /

└─vda15 1M part

As you can see above, we have four disks without a filesystem, each 100G in size. In this example, these devices have been given the /dev/sda, /dev/sdb, /dev/sdc, and /dev/sdd identifiers for this session. These will be the raw components we will use to build the array.

Create the Array

To create a RAID 6 array with these components, pass them in to the mdadm --create command. You will have to specify the device name you wish to create (/dev/md0 in our case), the RAID level, and the number of devices:

sudo mdadm --create --verbose /dev/md0 --level=6 --raid-devices=4 /dev/sda /dev/sdb /dev/sdc /dev/sdd

The mdadm tool will start to configure the array (it actually uses the recovery process to build the array for performance reasons). This can take some time to complete, but the array can be used during this time. You can monitor the progress of the build by checking the /proc/mdstat file:

cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4] [linear] [multipath] [raid0] [raid1] [raid10]

md0 : active raid6 sdd[3] sdc[2] sdb[1] sda[0]

209584128 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

[>....................] resync = 0.6% (668572/104792064) finish=10.3min speed=167143K/sec

unused devices: <none>

As you can see in the line beginning with md0, the /dev/md0 device has been created in the RAID 6 configuration using the /dev/sda, /dev/sdb, /dev/sdc and /dev/sdd devices. The resync line shows the progress of the build. You can continue the guide while this process completes.

Create and Mount the Filesystem

Next, create a filesystem on the array:

sudo mkfs.ext4 -F /dev/md0

Create a mount point to attach the new filesystem:

sudo mkdir -p /mnt/md0

You can mount the filesystem by typing:

sudo mount /dev/md0 /mnt/md0

Check whether the new space is available by typing:

df -h -x devtmpfs -x tmpfs

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 20G 1.1G 18G 6% /

/dev/md0 197G 60M 187G 1% /mnt/md0

The new filesystem is mounted and accessible.

Save the Array Layout

We'll need to adjust the /etc/mdadm/mdadm.conf file to ensure that the array is reassembled automatically at boot. We can scan the active array and append its definition to the file by typing:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

Afterwards, you can update the initramfs, or initial RAM file system, so that the array will be available during the early boot process:

sudo update-initramfs -u

Add the new filesystem mount options to the /etc/fstab file for automatic mounting at boot:

echo '/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0' | sudo tee -a /etc/fstab

Your RAID 6 array should now be assembled and mounted automatically at each boot.

Creating a Complex RAID 10 Array

Traditionally, RAID 10 has been implemented by creating a striped RAID 0 array composed of sets of RAID 1 arrays. This kind of nested array provides both redundancy and high performance, at the expense of a large amount of disk space. The mdadm utility has its own RAID 10 type that provides the same kind of benefits with increased flexibility. It is not created by nesting arrays, but it has many of the same characteristics and guarantees. We will be using the mdadm RAID 10 here.

- Requirements: minimum of 3 storage devices

- Primary advantage: Redundancy and performance

- Consider the following: The number of data copies you choose to keep determines the amount of capacity reduction for the array. With mdadm style RAID 10, the number of copies saved is customizable.

In what is known as the "near" arrangement, two copies of each data block are kept by default. The following are the different layouts that determine how each data block is stored:

- near: The default arrangement. Copies of each chunk are written consecutively when striping, meaning that the copies of the data blocks are written around the same part of multiple disks.

- far: The first and subsequent copies are written to different parts of the storage devices in the array. For example, the first chunk may be written near the beginning of a disk, while the second copy is written halfway down a different disk. For traditional spinning disks, this can give some read performance gains at the expense of write performance.

- offset: Each stripe is copied, offset by one drive. This means that the copies are offset from one another, but still close together on the disk. This helps minimize excessive seeking during some workloads.

You can find out more about these layouts by checking out the “RAID10” section of this man page:

man 4 md

Identify the Component Devices

To get started, find the identifiers for the raw disks that you will be using:

lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT

NAME SIZE FSTYPE TYPE MOUNTPOINT

sda 100G disk

sdb 100G disk

sdc 100G disk

sdd 100G disk

vda 20G disk

├─vda1 20G ext4 part /

└─vda15 1M part

As you can see in the output above, we have four 100G drives without a filesystem. For this session, these devices have been given the identifiers /dev/sda, /dev/sdb, /dev/sdc, and /dev/sdd. These are the raw components that will be used to build the array.

Create the Array

Use the mdadm --create command to construct a RAID 10 array using these components. You must specify the device name to create (in our example, /dev/md0), the RAID level, and the number of devices.

You can set up two copies using the near layout by not specifying a layout and copy number:

sudo mdadm --create --verbose /dev/md0 --level=10 --raid-devices=4 /dev/sda /dev/sdb /dev/sdc /dev/sdd

If you wish to use a different layout or change the number of copies, you must use the --layout= option, which takes a layout and copy identifier. The layouts near, far, and offset are represented by the letters n, f, and o, respectively. The number of copies to keep is appended after the letter.

For example, to build an array with three copies in the offset layout, use the following command:

sudo mdadm --create --verbose /dev/md0 --level=10 --layout=o3 --raid-devices=4 /dev/sda /dev/sdb /dev/sdc /dev/sdd
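
To make the layout identifier concrete, the sketch below splits a value such as o3 into its layout name and copy count, and estimates usable capacity as total raw capacity divided by the number of copies. Both function names are hypothetical, and the capacity figure is an approximation that assumes equally sized devices and ignores metadata overhead.

```shell
# Split a --layout value (letter + copy count) into "NAME COPIES".
parse_raid10_layout() {
    layout="$1"
    copies="${layout#?}"          # everything after the first character
    case "$copies" in
        ''|*[!0-9]*) echo "unrecognized layout: $layout" >&2; return 1 ;;
    esac
    case "$layout" in
        n*) name=near ;;
        f*) name=far ;;
        o*) name=offset ;;
        *)  echo "unrecognized layout: $layout" >&2; return 1 ;;
    esac
    echo "$name $copies"
}

# Approximate usable capacity: devices * size per device / copies
# (integer division, same unit as the size argument).
raid10_usable() {
    echo $(( $1 * $2 / $3 ))
}

# Example:
#   parse_raid10_layout o3
#   raid10_usable 4 100 3
```

With four 100G devices, two copies leave about 200G usable, while three copies leave about 133G, which is the trade-off to weigh when choosing the copy count.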

The mdadm tool will start to configure the array (it actually uses the recovery process to build the array for performance reasons). This can take some time to complete, but the array can be used during this time. You can monitor the progress of the build by checking the /proc/mdstat file:

cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4] [linear] [multipath] [raid0] [raid1] [raid10]

md0 : active raid10 sdd[3] sdc[2] sdb[1] sda[0]

209584128 blocks super 1.2 512K chunks 2 near-copies [4/4] [UUUU]

[===>.................] resync = 18.1% (37959424/209584128) finish=13.8min speed=206120K/sec

The line beginning with md0 shows that the /dev/md0 device has been created in the RAID 10 configuration using the /dev/sda, /dev/sdb, /dev/sdc, and /dev/sdd devices. The following line shows the layout used for this example (2 copies in the near configuration), and the resync line shows the progress of the build. You can continue reading the tutorial while this process runs.

Create and Mount the Filesystem

Next, create a filesystem on the array:

sudo mkfs.ext4 -F /dev/md0

Create a mount point to attach the new filesystem:

sudo mkdir -p /mnt/md0

You can mount the filesystem by typing:

sudo mount /dev/md0 /mnt/md0

Check whether the new space is available by typing:

df -h -x devtmpfs -x tmpfs

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 20G 1.1G 18G 6% /

/dev/md0 197G 60M 187G 1% /mnt/md0

The new filesystem is mounted and accessible.

Save the Array Layout

To make sure that the array is reassembled automatically at boot, we will have to adjust the /etc/mdadm/mdadm.conf file. We can automatically scan the active array and append its definition to the file by typing:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

Afterwards, you can update the initramfs, or initial RAM file system, so that the array will be available during the early boot process:

sudo update-initramfs -u

Add the new filesystem mount options to the /etc/fstab file for automatic mounting at boot:

echo '/dev/md0 /mnt/md0 ext4 defaults,nofail,discard 0 0' | sudo tee -a /etc/fstab

Your RAID 10 array should now be assembled and mounted automatically at each boot. Learn how to remove a software RAID device using mdadm!

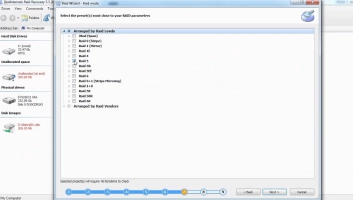

Steps to Use DiskInternals RAID Recovery

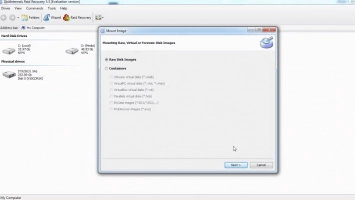

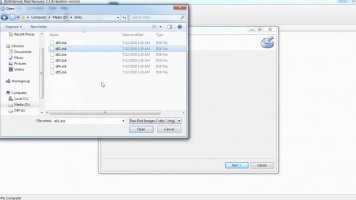

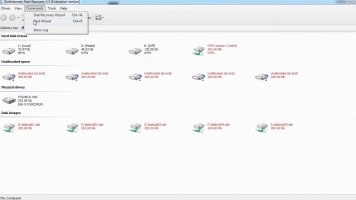

Recovering files with DiskInternals RAID Recovery is a straightforward process that can be done manually or by following the on-screen instructions of the Recovery Wizard.

First Step:

Download and install RAID Recovery on a Windows system. This software is compatible with Windows 7/8/8.0/10/11 and Windows Server 2003-2019.

Second Step:

Launch the app after installation and select the affected target array. Next, choose a recovery mode:

- Fast recovery mode

- Full recovery mode

Fast Recovery Mode scans quickly and saves time, but it does not dig deep enough to discover all the lost files. Full Recovery Mode takes longer and retrieves all lost files.

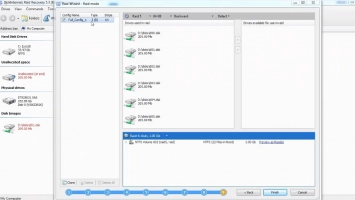

Third Step:

DiskInternals RAID Recovery automatically checks the status of the selected RAID array, controller, file system, or disk to recover lost files. You can preview the recovered files before saving them back to your local storage. To save the recovered files, you need to purchase a license.

Is the damage too deep/severe for you to restore? You can request guided assistance from RAID Recovery experts. Learn more about software RAID vs hardware RAID recovery here!

Recovery Tips:

- Follow the recovery steps diligently - wait until each step executes successfully before proceeding to the next; don’t hurry!

- Make sure you select the right drive for the scan; otherwise, the program won't find the file(s) you want to recover.

- Preview the files before attempting final recovery.

- Don't re-save the recovered data on the same drive where it was deleted/lost.

Video Guide On ZFS Recovery Process

Here’s a video guide that walks through all the steps explained above. With the best software, RAID recovery is easy: